Level up your memcached services with routing and handle any load.

Website hosting performance often comes down to applying effective caching layers. Although there are compelling in-memory key-value data stores to choose from, memcached remains a popular choice thanks to its stability, effectiveness, simplicity, compatibility, and ease of use.

Memcached has been battle-tested for many years in mission-critical applications and has proven itself a trusted solution. Things start to turn ugly, though, when the service needs to scale. Memcached wasn’t designed with scaling in mind: its servers are independent of one another, with no built-in replication or clustering. Mcrouter, which Facebook developed to solve its own scaling problems and later released as open-source software, handles this problem quite efficiently.

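Mcrouter is a memcached protocol router. Clients talk to it as if it were a memcached server, and it forwards each request to one or more memcached servers. This lets it turn memcached into a distributed system, adding layers of redundancy and letting the service scale in size and performance as needed.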

Here are some example mcrouter configuration schemes that show what’s possible.

Shard data across multiple memcached nodes

If the working set doesn’t fit on a single memcached server, we can shard the data across many memcached servers. Each key is hashed to exactly one server, so the capacity of the service grows as servers are added.
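Mcrouter handles the key-to-server mapping for you when requests are routed to a pool. The minimal plain-Python sketch below illustrates the principle only and is not mcrouter code. It assumes a consistent-hash ring with virtual nodes; mcrouter uses its own hash functions, which we don’t reproduce here. The server addresses are hypothetical.

```python
import bisect
import hashlib


class HashRing:
    """Toy consistent-hash ring mapping keys to memcached servers."""

    def __init__(self, servers, vnodes=100):
        # Place several virtual points per server on the ring to even out load.
        self._ring = sorted(
            (self._hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value):
        # MD5 is used only for a stable, well-spread hash, not for security.
        return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

    def server_for(self, key):
        # Walk clockwise to the first point at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._points, self._hash(key)) % len(self._points)
        return self._ring[idx][1]


# Hypothetical server addresses.
servers = ["10.0.0.1:11211", "10.0.0.2:11211", "10.0.0.3:11211"]
ring = HashRing(servers)
placement = {key: ring.server_for(key) for key in (f"user:{n}" for n in range(1000))}
```

The ring is consistent, so adding a fourth server moves only the keys that now hash into the new server’s arcs, roughly a quarter of them. Every moved key lands on the new server, and all other keys stay where they were.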

Prefix routing

Requests can be split by key prefix and routed to different memcached pools, so each request is served by the pool best suited to it. This way we can choose the size, performance, and redundancy level that each class of keys needs.
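A prefix router is essentially a longest-prefix match with a default. The sketch below models that selection logic in plain Python. In mcrouter itself this is, to our knowledge, configured with a PrefixSelectorRoute rather than written by hand. The prefixes and pool names are made up for the example.

```python
class PrefixRouter:
    """Toy router: pick a pool by the longest matching key prefix."""

    def __init__(self, policies, wildcard):
        # Try longer prefixes first so "session:admin:" wins over "session:".
        self._policies = sorted(policies.items(), key=lambda item: len(item[0]), reverse=True)
        self._wildcard = wildcard

    def pool_for(self, key):
        for prefix, pool in self._policies:
            if key.startswith(prefix):
                return pool
        # No prefix matched: fall back to the default pool.
        return self._wildcard


# Hypothetical prefixes and pool names.
router = PrefixRouter(
    policies={
        "session:": "small-fast-pool",
        "session:admin:": "replicated-pool",
        "page:": "large-sharded-pool",
    },
    wildcard="default-pool",
)
```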

Replicated pools for read-intensive workloads

In a replicated pool, writes go to all nodes, while reads are routed to a single replica chosen separately for each client. This is useful when a single memcached node can’t handle the read load: each added replica takes a share of the reads.
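The toy model below shows the replicated-pool behavior described above. Every write is fanned out to all replicas, and each client reads from one replica picked by a stable hash of its client ID. The replica names, the client IDs, and the use of CRC32 for the per-client choice are assumptions for illustration. Mcrouter’s own replica selection may differ.

```python
import zlib


class ReplicatedPool:
    """Toy replicated pool: writes go to every replica, reads to one per client."""

    def __init__(self, replica_names):
        # Each replica is modeled as a plain dict standing in for a memcached node.
        self.replicas = {name: {} for name in replica_names}
        self._names = list(replica_names)

    def set(self, key, value):
        # Fan the write out to all replicas so any of them can serve the read.
        for store in self.replicas.values():
            store[key] = value

    def replica_for(self, client_id):
        # Stable per-client choice: a client always reads from the same replica,
        # while different clients spread across the replicas.
        return self._names[zlib.crc32(client_id.encode()) % len(self._names)]

    def get(self, client_id, key):
        return self.replicas[self.replica_for(client_id)].get(key)


# Hypothetical replica names and client IDs.
pool = ReplicatedPool(["replica-a", "replica-b", "replica-c"])
pool.set("config:site", "v1")
readers = {pool.replica_for(f"web-{n}") for n in range(20)}  # replicas used by 20 clients
```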

Scale by combining local memcached service and remote shared memcached pool

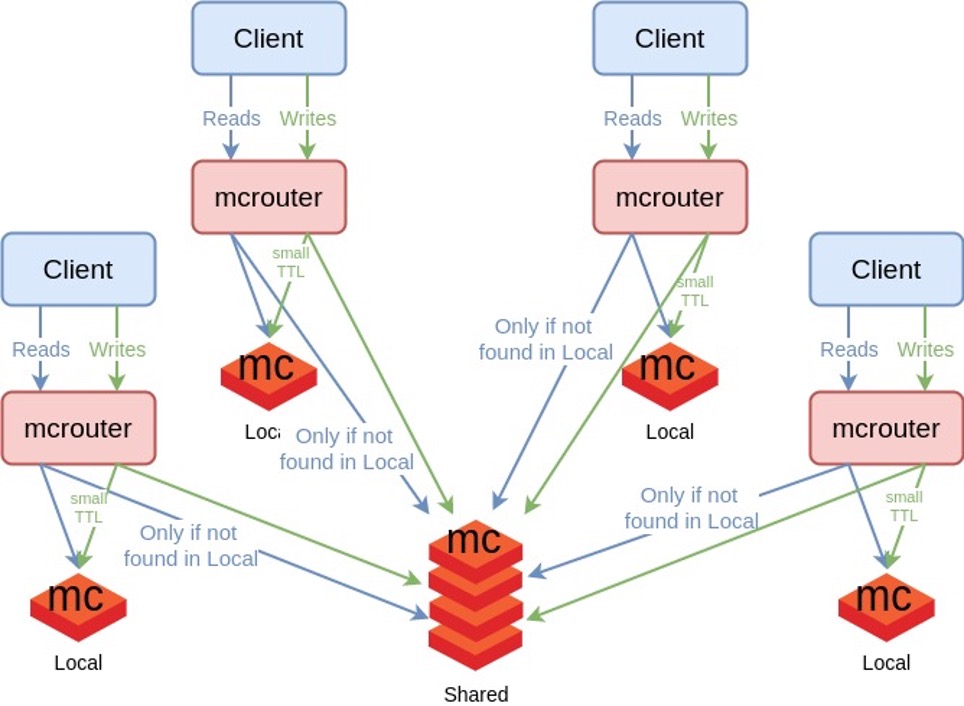

If the service can tolerate stale data for a short period of time, a configuration like the following can scale it "cheaply" and with significant advantages.

Here we have a locally installed memcached and a shared memcached pool. Writes from each client go to both the "local" memcached and the "shared" pool. Gets go to the "local" memcached first, and the "shared" pool is checked only on a miss. If the shared pool returns a hit, the response is returned to the client and an asynchronous, non-blocking request updates the "local" memcached. Writes to the "local" memcached are set with a small TTL (exptime). This TTL is how long this particular service can tolerate stale data (in most cases a few seconds).

This configuration lowers latency and lets the service scale without an oversized shared memcached pool, because a significant percentage of gets are served by the local memcached instance.

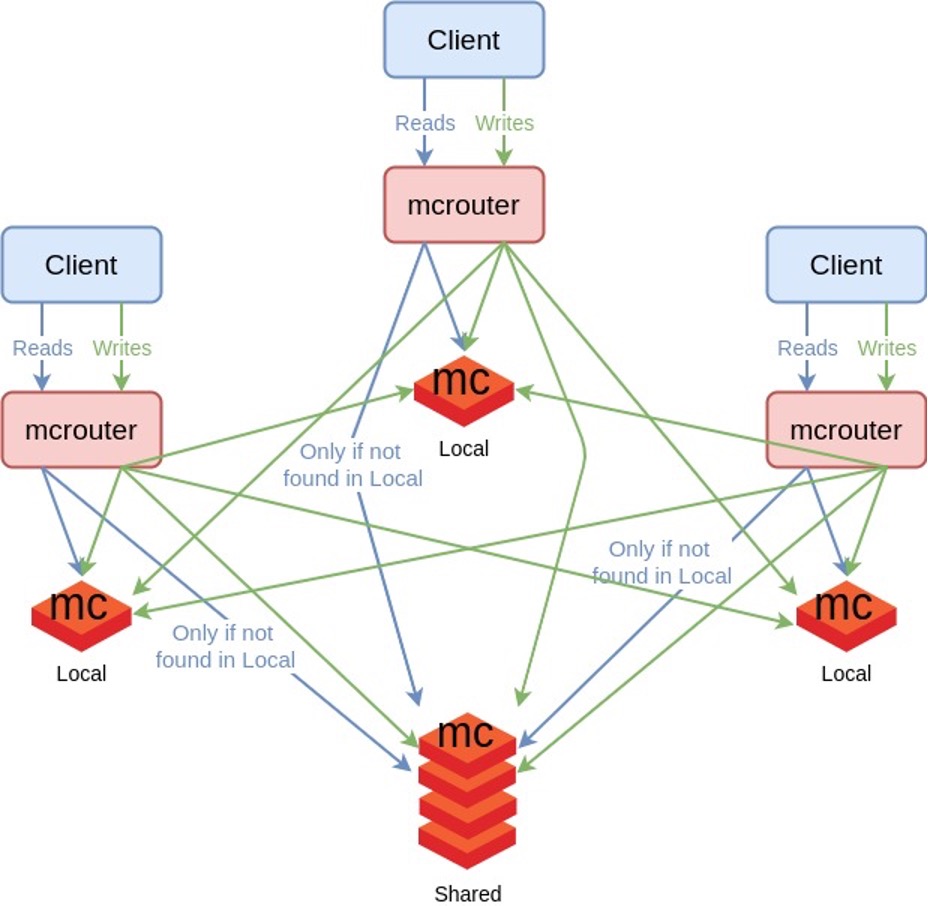
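To show how the local TTL bounds staleness, here is a small plain-Python model of the local-then-shared read path. Nodes are dicts with per-key expiry, and a fake clock keeps the example deterministic. In mcrouter this behavior is, as far as we know, provided by WarmUpRoute. The class names, host names, and 5-second TTL below are hypothetical, and the local refill is done inline rather than asynchronously.

```python
import time


class TTLStore:
    """Dict-backed stand-in for a memcached node with per-key expiry."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def set(self, key, value, exptime=0):
        # exptime=0 means "never expires", as in memcached.
        expires = self._clock() + exptime if exptime else None
        self._data[key] = (value, expires)

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        value, expires = item
        if expires is not None and self._clock() >= expires:
            del self._data[key]  # expired: behave like a miss
            return None
        return value


class LocalThenShared:
    """Toy two-level cache: local node first, shared pool on a miss."""

    def __init__(self, local, shared, local_ttl):
        self.local, self.shared, self.local_ttl = local, shared, local_ttl

    def set(self, key, value):
        # Writes go to both levels; the short local TTL bounds how stale reads can be.
        self.shared.set(key, value)
        self.local.set(key, value, exptime=self.local_ttl)

    def get(self, key):
        value = self.local.get(key)
        if value is not None:
            return value
        value = self.shared.get(key)
        if value is not None:
            # mcrouter refills the local node asynchronously; done inline here for simplicity.
            self.local.set(key, value, exptime=self.local_ttl)
        return value


now = [0.0]  # fake clock so the example is deterministic


def clock():
    return now[0]


shared = TTLStore(clock)  # one shared pool used by every host
host_a = LocalThenShared(TTLStore(clock), shared, local_ttl=5)
host_b = LocalThenShared(TTLStore(clock), shared, local_ttl=5)

host_a.set("greeting", "hello")
host_b.set("greeting", "hi")     # host_a's local copy is now stale
stale = host_a.get("greeting")   # served from host_a's local node
now[0] = 5.0                     # the 5-second local TTL has elapsed
fresh = host_a.get("greeting")   # local miss, so the shared pool answers
```

Host A keeps returning its stale local copy until the TTL expires. It then picks up the new value from the shared pool and caches it locally again.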

Scale by combining a local and a remote shared pool without tolerating stale data

The previous configuration’s need to tolerate briefly stale data can be removed by replicating every write to all nodes, local and remote. This multiplies write traffic N-fold, N being the number of nodes. The cost may be worth it when reads greatly outnumber writes, since gets are still served by the low-latency local memcached.
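The trade-off can be seen in a few lines of Python. In this toy model, with hypothetical host names and dicts standing in for memcached nodes, every write is replicated to the shared pool and to every host’s local node. Locally served reads are therefore never stale, but each logical write costs N node writes, where N is the number of local nodes plus one for the shared pool.

```python
class ReplicatedLocalCaches:
    """Toy model: every write reaches the shared pool and every host's local node."""

    def __init__(self, host_names):
        self.shared = {}
        self.local = {name: {} for name in host_names}
        self.writes_sent = 0  # counts individual node writes to show the N-fold cost

    def set(self, key, value):
        # Replicate to every node, local and remote, so no copy is left stale.
        for store in [self.shared, *self.local.values()]:
            store[key] = value
            self.writes_sent += 1

    def get(self, host, key):
        # Reads still hit the host's own node first; the shared pool is only a fallback.
        value = self.local[host].get(key)
        return value if value is not None else self.shared.get(key)


# Hypothetical three-host deployment.
cluster = ReplicatedLocalCaches(["web-1", "web-2", "web-3"])
cluster.set("greeting", "hello")
cluster.set("greeting", "hi")
```

Two logical sets produced eight node writes here (three local nodes plus the shared pool, twice). That is the N-fold write cost this configuration pays in exchange for local reads that don’t go stale.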

Some more features of mcrouter worth mentioning are the following:

- Destination health check: mcrouter checks the health of each destination, and if one becomes unresponsive it fails requests over to an alternative destination.
- Cold cache warm-up: mcrouter can fill a newly started, empty memcached server, or even an entire new cluster, making it "warm" before it is put into service.
- Online reconfiguration: you can modify the configuration of a running mcrouter, and it automatically reloads and applies the changes.
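The destination health check and failover behavior from the list above can be modeled like this. It is a simplified sketch, not mcrouter’s implementation. A destination is marked down after a hypothetical `max_failures` number of consecutive timeouts and is skipped afterwards, and requests fail over to the next destination in order. A real router also needs a way to bring a recovered destination back into service, for example by periodically probing it. That part is left out here.

```python
class Destination:
    """Stand-in for a memcached server that may be unresponsive."""

    def __init__(self, name, healthy=True):
        self.name, self.healthy, self.data = name, healthy, {}

    def get(self, key):
        if not self.healthy:
            raise TimeoutError(f"{self.name} did not respond")
        return self.data.get(key)


class FailoverRouter:
    """Toy failover: try destinations in order, skipping ones marked down."""

    def __init__(self, destinations, max_failures=3):
        self.destinations = destinations
        self.max_failures = max_failures
        self.failures = {d.name: 0 for d in destinations}

    def is_down(self, dest):
        return self.failures[dest.name] >= self.max_failures

    def get(self, key):
        for dest in self.destinations:
            if self.is_down(dest):
                continue  # marked down: don't waste a timeout on it
            try:
                value = dest.get(key)
            except TimeoutError:
                self.failures[dest.name] += 1
                continue  # fail over to the next destination
            self.failures[dest.name] = 0  # a success resets the counter
            return value
        raise RuntimeError("all destinations failed")


# Hypothetical addresses: the primary is unresponsive, the backup holds the data.
primary = Destination("10.0.0.1:11211", healthy=False)
backup = Destination("10.0.0.2:11211")
backup.data["greeting"] = "hello"
router = FailoverRouter([primary, backup], max_failures=2)
results = [router.get("greeting") for _ in range(3)]
```

The first two requests each pay a timeout on the primary before failing over. The third skips it entirely because it has been marked down.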

Mcrouter is like a "Swiss Army knife" for routing memcached requests. Its flexibility and support for complex routing schemes make it suitable for very complex and demanding requirements. If you need to scale your memcached service, you should definitely consider it.

Take a look at our Hosting and Support services to see where we can help.